AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning

Rpand002 Github Io

Dingwoai Multi Task Learning Giters

Home Rogerio Feris

Kate Saenko On Slideslive

Hanzhaoml Github Io

Adashare Learning What To Share For Efficient Deep Multi Task Learning Arxiv Vanity

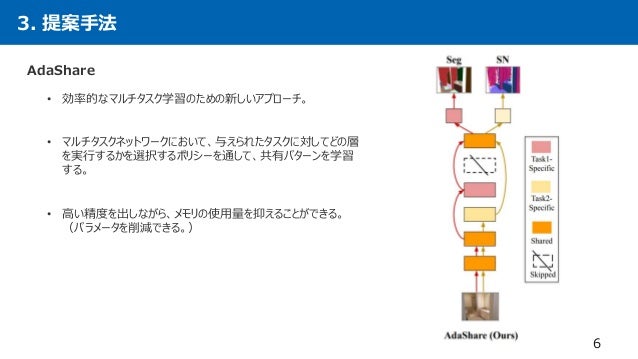

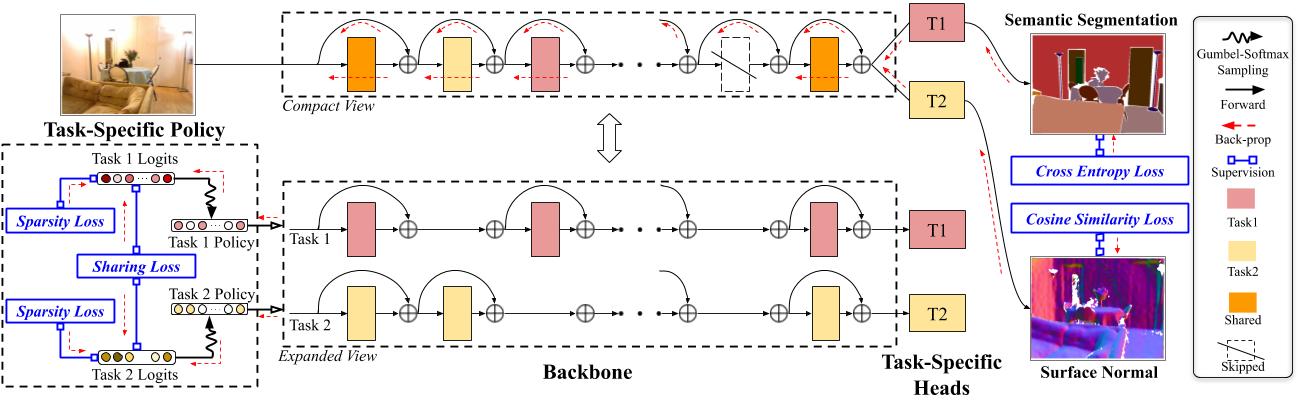

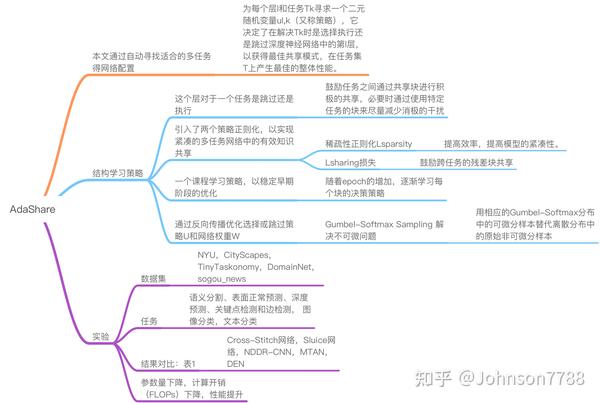

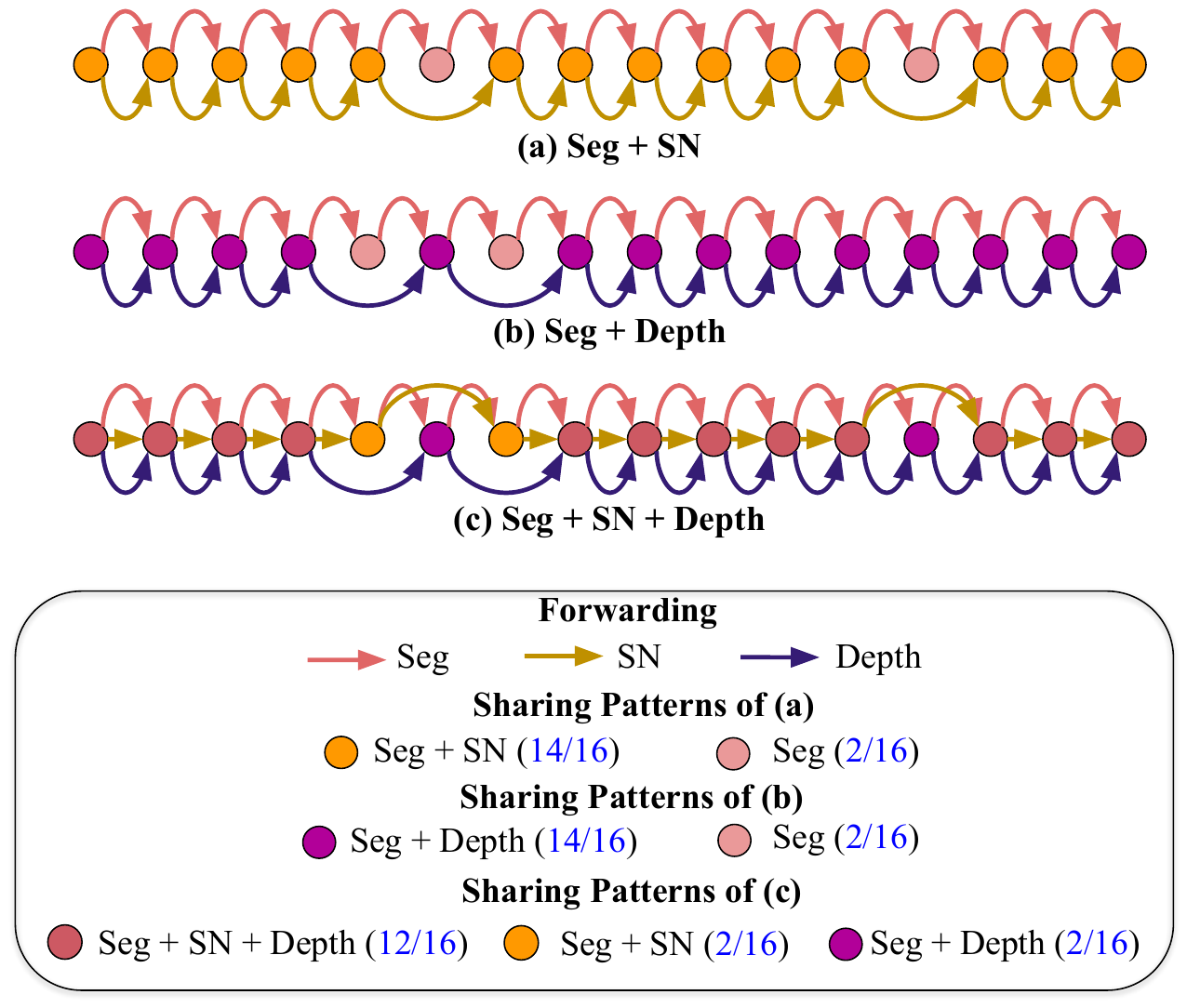

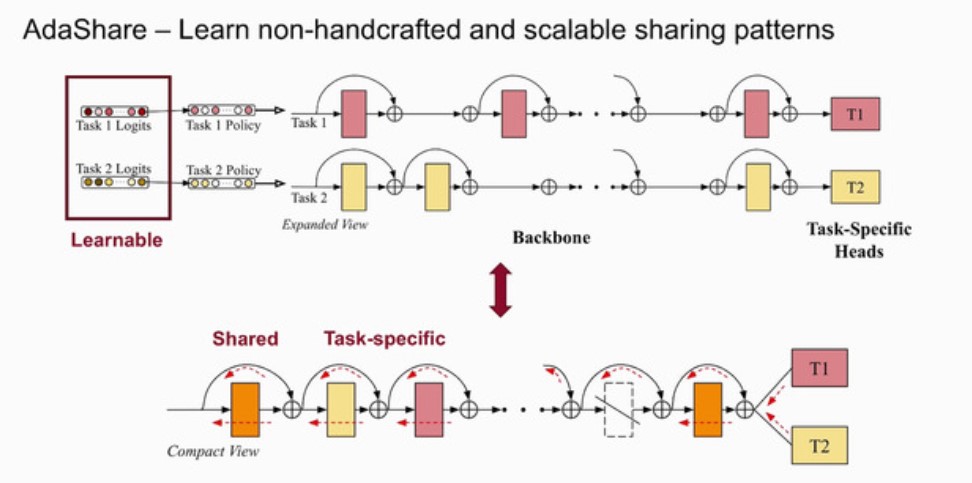

In contrast to these methods, we propose AdaShare, a novel and more efficient sharing scheme that learns separate execution paths for different tasks through a task-specific policy applied to a single multi-task network. Here, we show an example task-specific policy learned using AdaShare for the two tasks. Best viewed in color.
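The idea of separate execution paths through one shared network can be illustrated with a small sketch. This is not the authors' implementation: the toy residual blocks, the task names `segmentation` and `depth`, and the hand-written binary policies are all assumptions made for illustration (in AdaShare the policy is learned rather than fixed by hand).

```python
from typing import Callable, Dict, List, Sequence

Block = Callable[[List[float]], List[float]]


def make_block(scale: float) -> Block:
    """Toy residual branch F(x) = scale * x (stands in for a real conv block)."""
    return lambda x: [scale * v for v in x]


def run_task(x: Sequence[float], blocks: Sequence[Block], policy: Sequence[int]) -> List[float]:
    """Execute the shared blocks along one task's path.

    policy[l] == 1: execute block l (residual update x + F(x)).
    policy[l] == 0: skip block l (identity shortcut only).
    """
    if len(policy) != len(blocks):
        raise ValueError("policy needs one decision per block")
    out = list(x)
    for block, use in zip(blocks, policy):
        if use:
            out = [a + b for a, b in zip(out, block(out))]
    return out


# One set of blocks shared by every task.
shared_blocks = [make_block(1.0), make_block(2.0), make_block(0.5)]

# Hypothetical policies, written by hand here purely for illustration.
policies: Dict[str, List[int]] = {
    "segmentation": [1, 1, 0],
    "depth": [1, 0, 1],
}

outputs = {task: run_task([1.0, 2.0], shared_blocks, p) for task, p in policies.items()}

# A block is shared when every task's policy executes it.
shared_by_all = [
    idx for idx in range(len(shared_blocks)) if all(p[idx] for p in policies.values())
]
```

In this toy setup only block 0 is executed by both tasks; blocks 1 and 2 are each used by a single task, so the two tasks follow different paths through the same weights.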

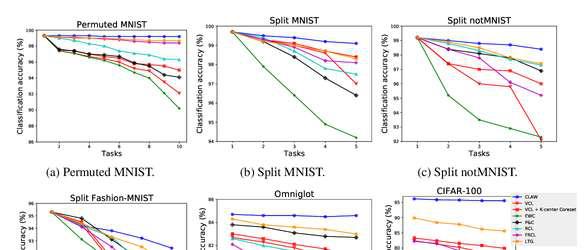

For DEN [1], we consult their public code for implementation details and use the same backbone and task-specific heads as AdaShare for a fair comparison. We empirically set ρ = 1 in DEN to get better performance (compared to ρ = 0.1). For Stochastic Depth [6], we randomly drop blocks for each task (with a linear decay rule p ...

Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics (yaringal/multitasklearningexample, CVPR 2018): Numerous deep learning applications benefit from multi-task learning with multiple regression and classification objectives.

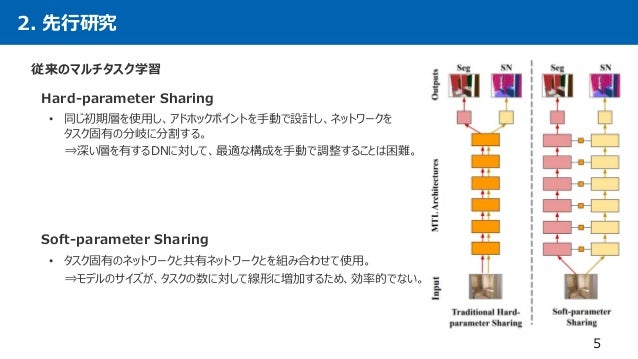

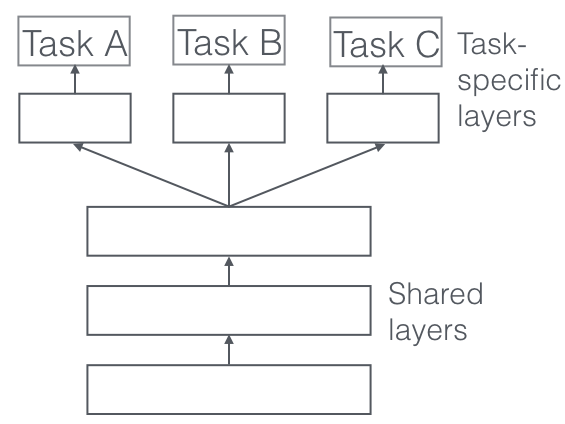

Jialong Wu, awesomemultitasklearning: a 2021 up-to-date list of papers on Multi-Task Learning (MTL), mainly for Computer Vision.

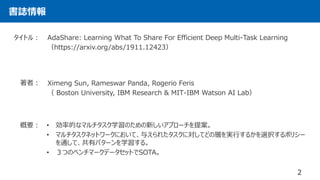

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (AdaShare/README.md at master, sunxm2357/AdaShare).

¹Boston University, ²MIT-IBM Watson AI Lab, IBM Research. {sunxm, saenko}@bu.edu, {rpanda@, rsferis@us.}ibm.com

Abstract: Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism.

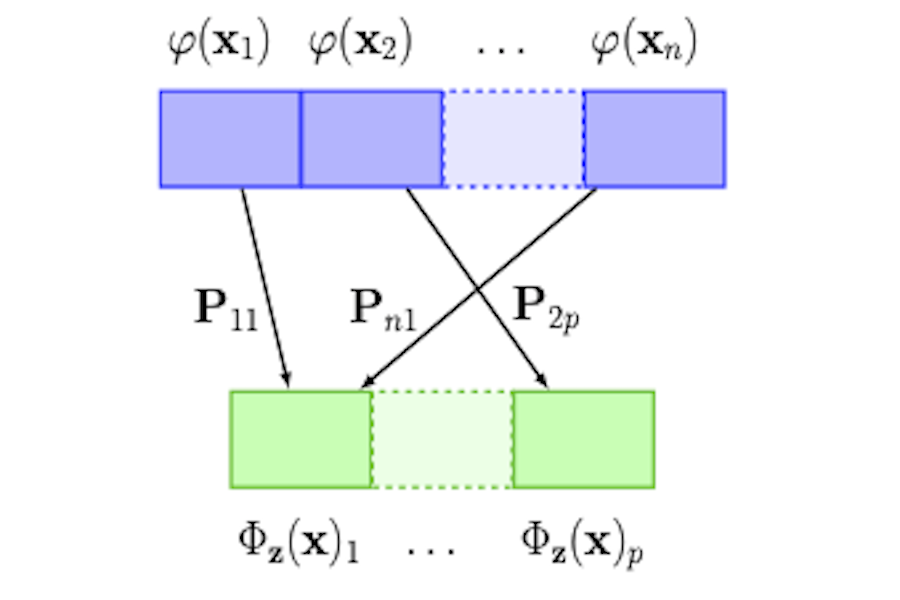

- We propose a novel and differentiable approach for adaptively determining the feature sharing pattern across multiple tasks (what layers to share across which tasks).
- We learn the feature sharing pattern jointly with the network weights using standard backpropagation.

Multi-Task Learning: Theory, Algorithms, and Applications. (ICML 2004) An efficient method is proposed to learn the parameters (of a shared covariance function) for the Gaussian process; it adopts the multi-task informative vector machine (IVM) to greedily select the most informative examples from the separate tasks and hence alleviate the computation cost.

Rameswar Panda: Research Staff Member, MIT-IBM Watson AI Lab (Computer Vision, Machine Learning, Artificial Intelligence).
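Learning a sharing pattern with standard backpropagation requires the discrete execute-or-skip decision to be relaxed into something differentiable. The text here does not say how this is done, so the sketch below assumes a Gumbel-Softmax relaxation, a common technique for this kind of discrete choice. The logits, the temperature, and the helper names `gumbel_softmax` and `soft_block` are illustrative assumptions, not the authors' code.

```python
import math
import random
from typing import List


def gumbel_softmax(logits: List[float], temperature: float, rng: random.Random) -> List[float]:
    """Draw a relaxed (soft) one-hot sample over the options described by `logits`."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise is -log(-log(u)) with u ~ Uniform(0, 1);
        # u is clamped away from 0 and 1 to keep the logarithms finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        noisy.append((logit - math.log(-math.log(u))) / temperature)
    # Numerically stable softmax over the perturbed logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def soft_block(x: float, branch_out: float, execute_weight: float) -> float:
    """Blend 'execute' (x + F(x)) and 'skip' (x) with a soft weight in [0, 1]."""
    return x + execute_weight * branch_out


rng = random.Random(0)
# Hypothetical learnable logits for one task, one pair per block: [execute, skip].
block_logits = [[2.0, -2.0], [0.0, 0.0], [-2.0, 2.0]]
samples = [gumbel_softmax(pair, temperature=1.0, rng=rng) for pair in block_logits]
execute_weights = [s[0] for s in samples]
```

Because `soft_block` is a smooth function of the weight, gradients of a task loss can flow back into the logits alongside the ordinary network weights. Lowering the temperature pushes the samples closer to hard 0/1 decisions.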

To this end, we propose AdaShare, a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the memory footprint as much as possible.

From a crawled list of NeurIPS papers (GitHub Gist):

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

Residual Distillation: Towards Portable Deep Neural Networks without Shortcuts. Guilin Li, Junlei Zhang, Yunhe Wang, Chuanjian Liu, Matthias Tan, Yunfeng Lin, Wei Zhang, Jiashi Feng, Tong Zhang.

Clustered multi-task learning: A convex formulation. In NIPS, 2009.

[23] Zhuoliang Kang, Kristen Grauman, and Fei Sha. Learning with whom to share in multi-task feature learning. In ICML, 2011.

Unlike existing methods, we propose an adaptive sharing approach, called *AdaShare*, that decides what to share across which tasks to achieve the best recognition accuracy, while taking resource efficiency into account.

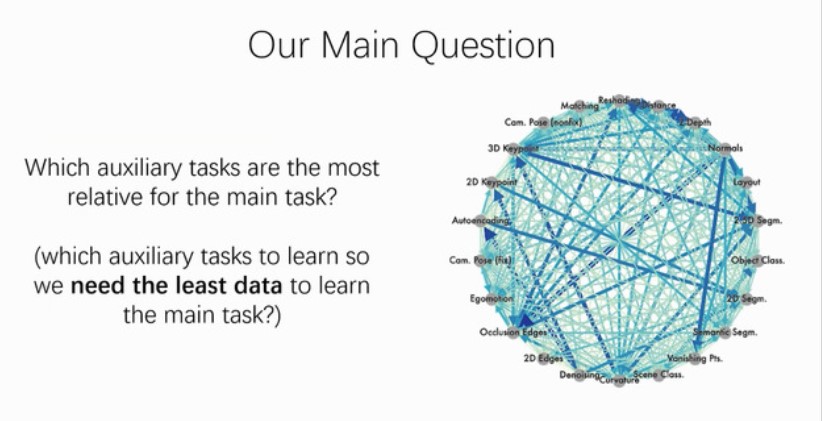

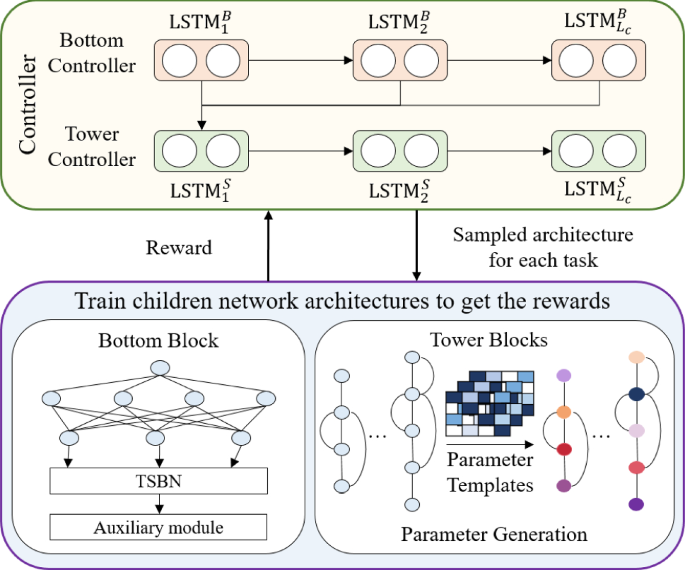

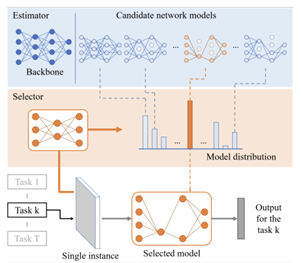

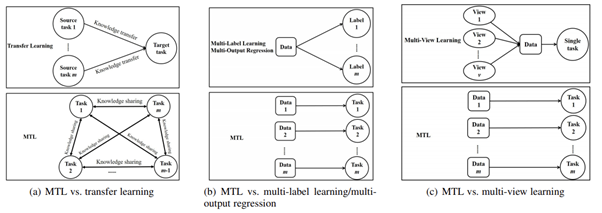

07/14: Multi-task learning (MTL) aims to learn a single model that performs multiple tasks while achieving good performance on all of them.

An overview of multi-task learning in deep neural networks. arXiv preprint, 2017.

Zhang Y, Yang Q. A survey on multi-task learning. arXiv preprint, 2017.

Zhang Y, Yang Q. An overview of multi-task learning. National Science Review, 2018, 5(1): 30-43.

Zhang Yu. Multi-task learning. Chinese Journal of Computers (计算机学报). Topics: theoretical analysis of MTL, challenges facing MTL, MTL network design.

Lecture: Model-based RL for multi-task learning (Chelsea Finn). P1: Visual Foresight: Model-Based Deep Reinforcement Learning for Vision-Based Robotic Control. Eb ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Dated December 13, 2019 and January 10; kawanokana; dls19, papers.

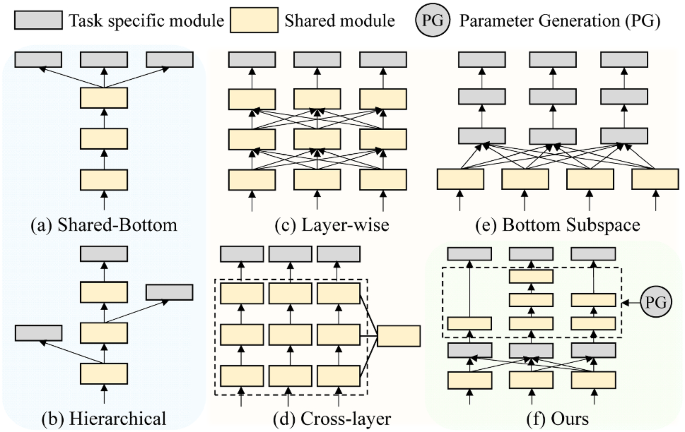

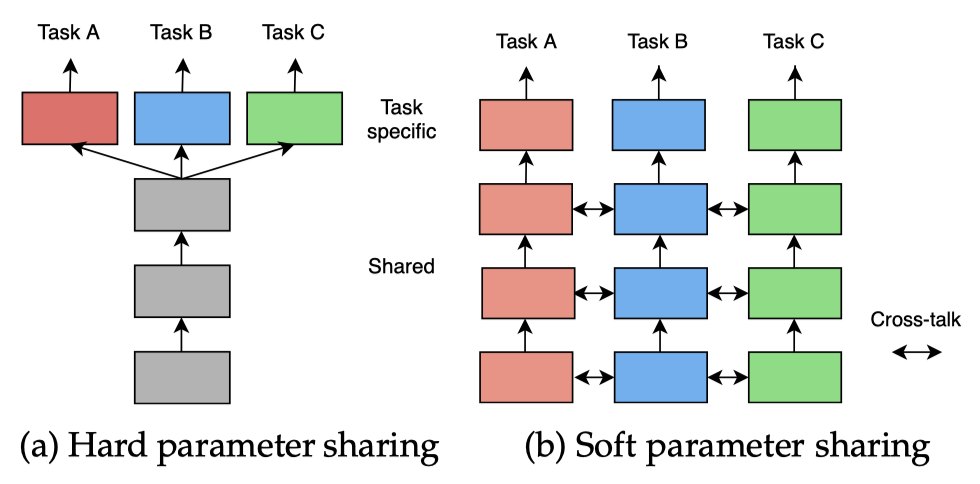

While many recent deep learning approaches have used multi-task learning, either explicitly or implicitly, as part of their model (prominent examples will be featured in the next section), they all employ the two approaches we introduced earlier: hard and soft parameter sharing. In contrast, only a few papers have looked at developing better mechanisms for MTL in ...

Soft-parameter sharing. Conventional multi-task learning:

- Use the same initial layers, manually design an ad hoc point, and split the network into task-specific branches. For deep networks with many layers, manually tuning the optimal configuration is difficult.

3. Proposed method: AdaShare

- A new approach for efficient multi-task learning.
- In a multi-task network, for a given task ...

Efficient Multi-task Deep Learning. Principal investigator: Klaus Obermayer. Team members: Heiner Spieß (doctoral researcher). Developing deep-learning methods, Research Unit 3, SCIoI Project 15. Deep learning excels in constructing hierarchical representations from raw data for robustly solving machine learning tasks, provided that data is sufficient.
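The hard and soft parameter sharing schemes mentioned above can be contrasted in a few lines. This is a minimal sketch under stated assumptions: the tiny linear layers, the task names `task_a` and `task_b`, and the squared-distance penalty (one common form of soft sharing) are illustrative choices, not taken from any of the cited works.

```python
from typing import Dict, List


def linear(weights: List[float], x: List[float]) -> float:
    """Dot product of one weight vector with the input."""
    return sum(w * v for w, v in zip(weights, x))


def hard_sharing_forward(
    x: List[float],
    shared_trunk: List[List[float]],
    task_heads: Dict[str, List[float]],
) -> Dict[str, float]:
    """Hard sharing: every task reuses the same trunk, then applies its own head."""
    hidden = [max(0.0, linear(row, x)) for row in shared_trunk]  # shared ReLU layer
    return {task: linear(head, hidden) for task, head in task_heads.items()}


def soft_sharing_penalty(params_a: List[float], params_b: List[float]) -> float:
    """Soft sharing: each task keeps its own weights; a squared-distance
    penalty added to the loss encourages them to stay similar."""
    return sum((a - b) ** 2 for a, b in zip(params_a, params_b))


# Hypothetical weights chosen by hand for the example.
trunk = [[1.0, 0.0], [0.0, -1.0]]
heads = {"task_a": [1.0, 1.0], "task_b": [0.5, 2.0]}
predictions = hard_sharing_forward([2.0, 3.0], trunk, heads)
penalty = soft_sharing_penalty([1.0, 2.0], [1.0, 4.0])
```

In the hard-sharing case the branching point (where the trunk ends and the heads begin) is fixed by hand, which is the design decision that adaptive schemes such as AdaShare aim to learn instead.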

Efficiently Identifying Task Groupings for Multi-Task Learning: Multi-task learning can leverage information learned by one task to benefit the training of other tasks.


Computer Vision Archives Mit Ibm Watson Ai Lab

Research Google

Arxiv Org

Cs People Bu Edu

DL Reading Group (DL輪読会) Adashare Learning What To Share For Efficient Deep Multi Task

Learning To Branch For Multi Task Learning Deepai

Pdf Saliency Regularized Deep Multi Task Learning

Kate Saenko Adashare Learning What To Share For Efficient Deep Multi Task Learning Ximeng Sun Rameswar Panda Rogerio Feris Kate Saenko We Dec 9 12 00 Poster Session 4 How To

Openreview Net

Proceedings Mlr Press

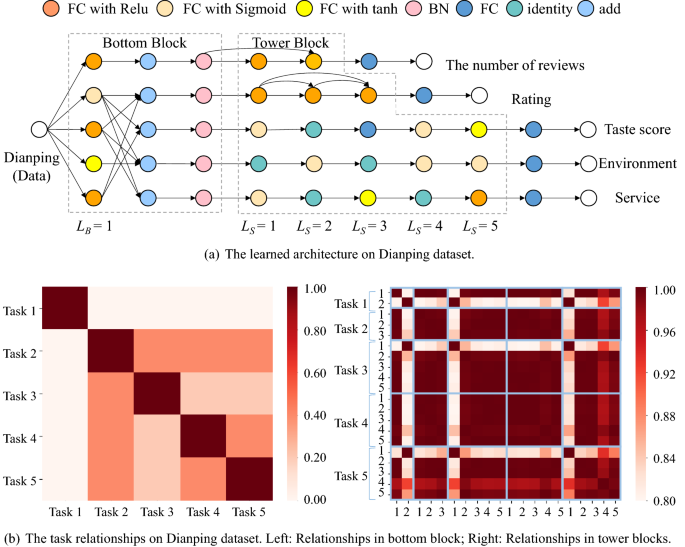

Auto Virtualnet Cost Adaptive Dynamic Architecture Search For Multi Task Learning Sciencedirect

Cs Columbia Edu

Proceedings Neurips Cc

128 84 4 18

Adashare Learning What To Share For Efficient Deep Multi Task Learning Deepai

Adashare Learning What To Share For Efficient Deep Multi Task Learning Papers With Code

Dl Acm Org

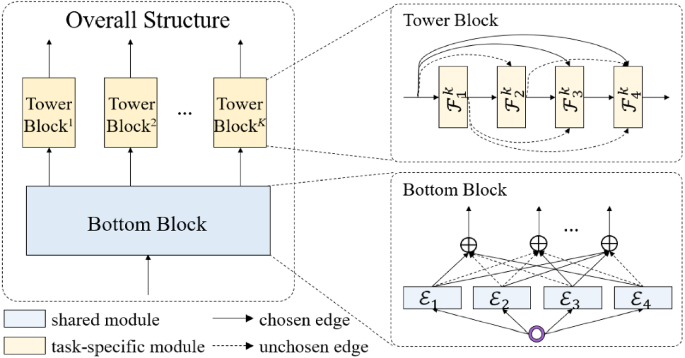

Deep Multi Task Learning With Flexible And Compact Architecture Search Springerlink

3neutronstar

Aci Institute

Kdst Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips Paper Review

Pdf Adashare Learning What To Share For Efficient Deep Multi Task Learning Semantic Scholar

Ximeng Sun Catalyzex

D How To Do Multi Task Learning Intelligently R Machinelearning

Learned Weight Sharing For Deep Multi Task Learning By Natural Evolution Strategy And Stochastic Gradient Descent Deepai

Openreview

How To Do Multi Task Learning Intelligently

Manchery Awesome Multi Task Learning Giters

Adashare Efficient Deep Multi Task Learning Zhihu

Rogerio Feris On Slideslive

Adashare Learning What To Share For Efficient Deep Multi Task Learning Pythonrepo

Multimodal Learning Archives Mit Ibm Watson Ai Lab

Ri Cmu Edu

Adashare Learning What To Share For Efficient Deep Multi Task Learning Request Pdf

Adashare Learning What To Share For Efficient Deep Multi Task Learning Aminer

Deep Learning Multi Task Learning Shelleyhlx's Blog Csdn Blog

The Comet Newsletter Issue 7 Comet Tensorboardx Tesla At Cvpr A Guide To Ml Job Interviews And More Comet

The Best 26 Python Multi Task Learning Libraries Pythonrepo

Multi Task Learning Study Notes: A Record Of The Literature Read While Learning MTL By Yanwei Liu Medium

Openaccess Thecvf Com

Cvpr Dira Lipingyang Org

Pdf Stochastic Filter Groups For Multi Task Cnns Learning Specialist And Generalist Convolution Kernels

Rethinking Hard Parameter Sharing In Multi Task Learning Deepai
